So, what else can “vmkvsitools” do? Although I haven’t finished exploring all the parameters yet, you can try some of the commands listed below:

Usage: ‘vmkvsitools CMD option’

CMD: amldump, bootOption, hwclock, hwinfo, lsof, lspci, pci-info, pidof, ps, vdf, vdu, vmksystemswap, vmware

eg1. “vmkvsitools CMD -h” -> get help for CMD

eg2. “vmkvsitools hwinfo -p” -> print info for all PCI devices present

eg3. “vmkvsitools hwinfo -h” -> print usage for hwinfo

eg4. “vmkvsitools lspci -p” -> print details of the PCI devices

eg5. “vmkvsitools vmware -v” -> print the vmkernel version

eg6. “vmkvsitools vmware -l” -> print the release level

eg7. “vmkvsitools hwclock -d 10/07/2010 -t 00:33:00” -> set the date and time

eg8. “vmkvsitools ps -c” -> print process IDs (PIDs) with verbose command lines, similar to “ps auxwww”

Well, just give it a try; there is too much to explain here. Remember “vmkvsitools CMD -h” for help.
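Since every subcommand answers to `-h`, you can collect all of the help pages in one go. The following is a minimal sketch, assuming a Python 3 interpreter is available on a machine where `vmkvsitools` is on the `PATH`; `help_argv` and `collect_help` are hypothetical helper names, and the subcommand list is copied from the `CMD:` line of the usage text. Stdout and stderr are merged because it is not documented here which stream each subcommand uses for its usage text.

```python
import subprocess

# Subcommands copied from the "CMD:" line of the usage text.
VMKVSITOOLS_CMDS = [
    "amldump", "bootOption", "hwclock", "hwinfo", "lsof", "lspci",
    "pci-info", "pidof", "ps", "vdf", "vdu", "vmksystemswap", "vmware",
]


def help_argv(cmd):
    """Build the argument vector for 'vmkvsitools CMD -h' (hypothetical helper)."""
    return ["vmkvsitools", cmd, "-h"]


def collect_help(cmds=VMKVSITOOLS_CMDS):
    """Run 'vmkvsitools CMD -h' for each subcommand and return {cmd: text}.

    stdout and stderr are merged (assumption: either may carry the usage).
    If vmkvsitools cannot be started, the entry records the error instead.
    """
    pages = {}
    for cmd in cmds:
        try:
            proc = subprocess.Popen(
                help_argv(cmd),
                stdout=subprocess.PIPE,
                stderr=subprocess.STDOUT,
            )
            out, _ = proc.communicate()
            pages[cmd] = out.decode("utf-8", "replace")
        except OSError as exc:
            pages[cmd] = "could not run vmkvsitools: %s" % exc
    return pages


if __name__ == "__main__":
    for cmd, text in collect_help().items():
        print("==== vmkvsitools %s -h ====" % cmd)
        print(text)
```

Collecting the pages into a dictionary keeps each subcommand's help separate, so you can search or save them afterwards instead of scrolling through one long terminal session.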

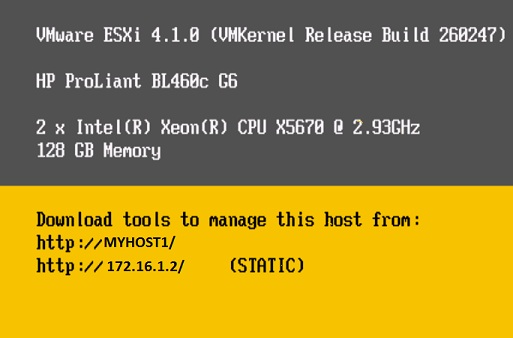

How do you launch the DCUI window shown above from the command line?

~ # cd /usr/sbin

/sbin # dcui

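What is amldump? It dumps the host's ACPI tables, writing each one to a .aml file named after the table's signature: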

~ # amldump

Writing file DSDT.aml

Writing file FACS.aml

Writing file FACP.aml

Writing file SSDT.aml

Writing file MSDM.aml

Writing file HPET.aml

Writing file MCFG.aml

Writing file TAMG.aml

Writing file APIC.aml

~ #
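The file names are ACPI table signatures (DSDT, FACP, SSDT, APIC, HPET, MCFG and so on), so you can inspect what was dumped by reading each table's header. The sketch below assumes that amldump writes each table verbatim, starting with the standard 36-byte ACPI System Description Table header (signature, length, revision, checksum, OEM ID, OEM table ID, OEM revision, creator ID, creator revision), and checks the ACPI rule that all bytes of a table sum to zero modulo 256. FACS is skipped because it uses its own layout without that header. The names `read_acpi_header` and `ACPI_HEADER` are illustrative.

```python
import glob
import struct

# Standard 36-byte ACPI System Description Table header (little-endian):
# signature, length, revision, checksum, OEM ID, OEM table ID,
# OEM revision, creator ID, creator revision.
ACPI_HEADER = struct.Struct("<4sIBB6s8sI4sI")


def _text(raw):
    """Decode a fixed-width ASCII field, dropping space/NUL padding."""
    return raw.rstrip(b" \x00").decode("ascii", "replace")


def read_acpi_header(data):
    """Parse the header of one raw ACPI table and verify its checksum."""
    if len(data) < ACPI_HEADER.size:
        raise ValueError("data too short for an ACPI table header")
    (sig, length, revision, _checksum, oem_id, oem_table_id,
     oem_revision, creator_id, creator_revision) = ACPI_HEADER.unpack_from(data)
    return {
        "signature": _text(sig),
        "length": length,
        "revision": revision,
        "oem_id": _text(oem_id),
        "oem_table_id": _text(oem_table_id),
        "oem_revision": oem_revision,
        "creator_id": _text(creator_id),
        "creator_revision": creator_revision,
        # All bytes of a valid table, over its declared length, sum to 0 mod 256.
        "checksum_ok": length <= len(data) and sum(data[:length]) % 256 == 0,
    }


def main():
    # Run in the directory where amldump wrote its files.
    # Assumes each .aml file holds the table verbatim, header included.
    for path in sorted(glob.glob("*.aml")):
        with open(path, "rb") as f:
            data = f.read()
        if data[:4] == b"FACS":
            # FACS has its own layout without the common header or checksum.
            print("%s: FACS, skipped" % path)
            continue
        h = read_acpi_header(data)
        print("%s: %s len=%d rev=%d OEM=%s/%s checksum %s" % (
            path, h["signature"], h["length"], h["revision"],
            h["oem_id"], h["oem_table_id"],
            "ok" if h["checksum_ok"] else "BAD"))


if __name__ == "__main__":
    main()
```

A failed checksum on a dumped file would suggest either a truncated dump or that the file does not contain the raw table as assumed here.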


The following commands and scripts are regularly used at the core level of ESX/ESXi Server, including the operating system's background processes (daemons):

techsupport.sh

vmkdump_extract

amldump

ntpd

vmkerrcode

apply-host-profiles

vmkeventd

applyHostProfile

vmkfstools

esxtop

vmkiscsi-tool

auto-backup.sh

esxupdate

partedUtil

vmkiscsid

backup.sh

ethtool

pidof

chkconfig

firmwareConfig.sh

vmkmkdev

dcbd

ft-stats

randomSeed

vmkperf

dcui

generate-certificates

vmkramdisk

df

hbrfilterctl

scantools

vmksystemswap

dmesg

sensord

vmtar

doat

hwclock

vmware-autostart.sh

hwinfo

sfcbd

vmware-usbarbitrator

shutdown.sh

esxcfg-dumppart

smbiosDump

vscsiStats

esxcfg-fcoe

watchdog.sh

logchannellogger

storageRM

lspci

tmpwatch.py

lsusb

traceroute

uptime

memstats

vm-support

net-dvs

vmdumper

net-fence

vmfs-support

net-lbt

vmkbacktrace

netlogond

vmkchdev

Session.py

vmkdevmgr

ntp-keygen

localcli

statedumper

vmkload_mod

bootOption

fdisk

vmkmicrocode
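This listing comes from one host, and the exact set of binaries varies with the ESX/ESXi version, so it can be handy to check which of them a given host actually has. A minimal sketch, assuming a Python 3 interpreter is available where you run it; `TOOLS` is a hand-picked subset of the names above and `find_tools` is a hypothetical helper built on the standard library's `shutil.which`.

```python
import shutil

# A hand-picked subset of the tool names listed above; extend as needed.
TOOLS = ["esxtop", "vmkfstools", "amldump", "partedUtil", "vm-support",
         "localcli", "smbiosDump", "vmkload_mod", "lspci"]


def find_tools(names, path=None):
    """Map each tool name to its full path, or None if it is not found.

    With path=None the search uses $PATH; pass a directory such as "/sbin"
    (or several joined with os.pathsep) to restrict where it looks.
    """
    return {name: shutil.which(name, path=path) for name in names}


if __name__ == "__main__":
    for name, location in sorted(find_tools(TOOLS).items()):
        print("%-12s %s" % (name, location or "not found"))
```

`shutil.which` only reports files that exist and are executable, so a name that prints "not found" is either absent on that build or outside the searched directories.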